Understanding measures and metrics

Measures and metrics used in SonarQube to evaluate your code.

Metrics are used to measure:

Security, maintainability, and reliability attributes on the basis of statistics on the detected security, maintainability, and reliability issues, respectively.

Test coverage on the basis of coverage statistics on executable lines and evaluated conditions.

Code cyclomatic and cognitive complexities.

Security review level on the basis of statistics on reviewed security hotspots.

Metrics also include statistics on:

Duplicated lines and blocks.

Code size (the number of various code elements).

Issues.

Finally, metrics also include the quality gate status result.

A metric refers to either new code or overall code. Most metrics can be used to define the quality gate conditions.

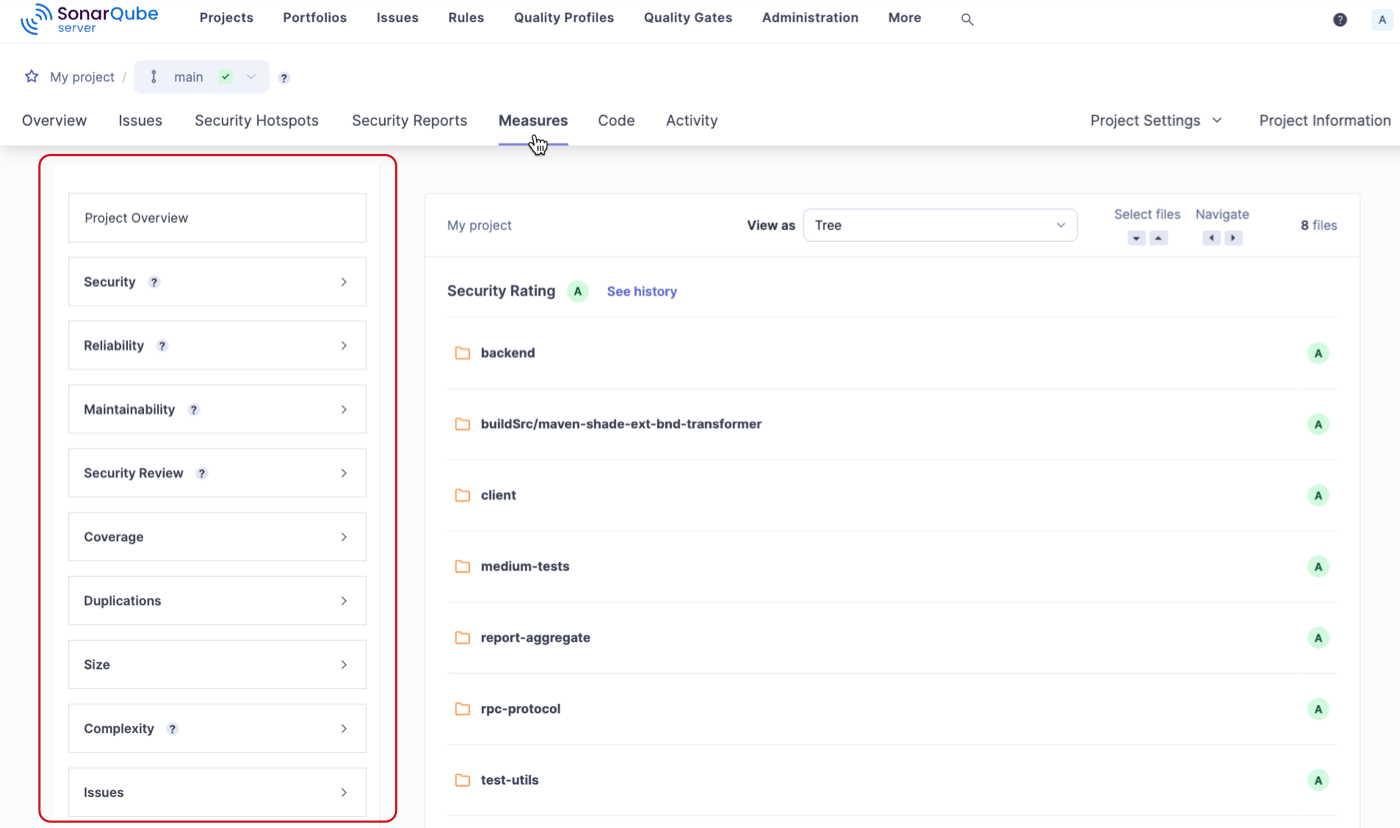

You can find these metrics in the Measures tab of your projects and portfolios.

You can retrieve the metrics through the Web API by using the metric key.
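As a minimal sketch of such a retrieval, the Python snippet below builds a request to the `api/measures/component` Web API endpoint and reads the returned values. The server URL, project key, and token are placeholders. The response handling assumes the JSON layout this endpoint typically returns (a `component` object with a `measures` list, where new-code metrics carry their value under `period`), and the token is assumed to be accepted as the user name of HTTP basic authentication. Check your server's Web API documentation before relying on either assumption.

```python
import base64
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_measures_url(base_url, component_key, metric_keys):
    """Build the request URL for api/measures/component."""
    query = urlencode({"component": component_key, "metricKeys": ",".join(metric_keys)})
    return f"{base_url.rstrip('/')}/api/measures/component?{query}"


def parse_measures(payload):
    """Map metric key -> value from a decoded JSON response (assumed layout)."""
    values = {}
    for measure in payload.get("component", {}).get("measures", []):
        # Overall metrics expose "value"; new-code metrics are assumed to use "period".
        value = measure.get("value", measure.get("period", {}).get("value"))
        values[measure["metric"]] = value
    return values


def fetch_measures(base_url, token, component_key, metric_keys):
    """Call the Web API, sending the token as the basic-auth user name."""
    request = Request(build_measures_url(base_url, component_key, metric_keys))
    credentials = base64.b64encode(f"{token}:".encode()).decode()
    request.add_header("Authorization", f"Basic {credentials}")
    with urlopen(request) as response:
        return parse_measures(json.load(response))
```

For example, `fetch_measures("https://sonar.example.com", token, "my_project", ["coverage", "new_coverage"])` would return a dictionary keyed by metric key, with values as the strings the server sent.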

Security

A list of security metrics used in the Sonar solution. See Security-related rules for more information.

Security issues

software_quality_security_issues

The total number of issues impacting security.

Security issues on new code

new_software_quality_security_issues

The total number of security issues raised for the first time on new code.

Security rating

software_quality_security_rating

Rating related to security. The rating grid is as follows:

A = 0 or more info issues

B = at least one low issue

C = at least one medium issue

D = at least one high issue

E = at least one blocker issue
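One way to read this grid is that the rating follows the most severe issue present. The Python sketch below illustrates that reading; the function name and the lowercase severity strings are illustrative assumptions, not SonarQube identifiers.

```python
# Severities ordered from least to most severe, with the rating each one yields.
SEVERITY_ORDER = ["info", "low", "medium", "high", "blocker"]
RATING_BY_SEVERITY = {"info": "A", "low": "B", "medium": "C", "high": "D", "blocker": "E"}


def security_rating(issue_severities):
    """Return the rating for a list of issue severities (no issues -> A)."""
    if not issue_severities:
        return "A"
    worst = max(issue_severities, key=SEVERITY_ORDER.index)
    return RATING_BY_SEVERITY[worst]
```

With this reading, a project whose issues are `["low", "high", "medium"]` is rated D, because the single high issue decides the grade.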

Security rating on new code

new_software_quality_security_rating

Rating related to security on new code.

Security remediation effort

software_quality_security_remediation_effort

The effort to fix all vulnerabilities. The remediation cost of an issue is taken over from the effort (in minutes) assigned to the rule that raised the issue (see Technical debt in the Maintainability section).

An 8-hour day is assumed when values are shown in days.

Security remediation effort on new code

new_software_quality_security_remediation_effort

The same as Security remediation effort but on new code.

Vulnerabilities

vulnerabilities

The total number of vulnerabilities.

Vulnerabilities on new code

new_vulnerabilities

The total number of vulnerabilities raised for the first time on new code.

Security rating

security_rating

Rating related to security. The rating grid is as follows:

A = 0 vulnerabilities

B = at least one minor vulnerability

C = at least one major vulnerability

D = at least one critical vulnerability

E = at least one blocker vulnerability

Security rating on new code

new_security_rating

Rating related to security on new code.

Security remediation effort

security_remediation_effort

The effort to fix all vulnerabilities. The remediation cost of an issue is taken over from the effort (in minutes) assigned to the rule that raised the issue (see Technical debt in the Maintainability section).

An 8-hour day is assumed when values are shown in days.

Security remediation effort on new code

new_security_remediation_effort

The same as Security remediation effort but on new code.

Reliability

A list of Reliability metrics used in the Sonar solution.

Reliability issues

software_quality_reliability_issues

The total number of issues impacting reliability.

Reliability issues on new code

new_software_quality_reliability_issues

The total number of reliability issues raised for the first time on new code.

Reliability rating

software_quality_reliability_rating

Rating related to reliability. The rating grid is as follows:

A = 0 or more info issues

B = at least one low issue

C = at least one medium issue

D = at least one high issue

E = at least one blocker issue

Reliability rating on new code

new_software_quality_reliability_rating

Rating related to reliability on new code.

Reliability remediation effort

software_quality_reliability_remediation_effort

The effort to fix all reliability issues. The remediation cost of an issue is taken over from the effort (in minutes) assigned to the rule that raised the issue. An 8-hour day is assumed when values are shown in days.

Reliability remediation effort on new code

new_software_quality_reliability_remediation_effort

The same as Reliability remediation effort but on new code.

Bugs

bugs

The total number of bugs.

Bugs on new code

new_bugs

The total number of bugs raised for the first time on new code.

Reliability rating

reliability_rating

Rating related to reliability. The rating grid is as follows:

A = 0 bugs

B = at least one minor bug

C = at least one major bug

D = at least one critical bug

E = at least one blocker bug

Reliability rating on new code

new_reliability_rating

Rating related to reliability on new code.

Reliability remediation effort

reliability_remediation_effort

The effort to fix all reliability issues. The remediation cost of an issue is taken over from the effort (in minutes) assigned to the rule that raised the issue. An 8-hour day is assumed when values are shown in days.

Reliability remediation effort on new code

new_reliability_remediation_effort

The same as Reliability remediation effort but on new code.

Maintainability

A list of Maintainability metrics used in the Sonar solution.

Maintainability issues

software_quality_maintainability_issues

The total number of issues impacting maintainability.

Maintainability issues on new code

new_software_quality_maintainability_issues

The total number of maintainability issues raised for the first time on new code.

Technical debt

software_quality_maintainability_remediation_effort

A measure of effort to fix all maintainability issues. See below.

Technical debt on new code

new_software_quality_maintainability_remediation_effort

A measure of effort to fix the maintainability issues raised for the first time on new code.

Technical debt ratio

software_quality_maintainability_debt_ratio

The ratio between the cost to fix the software and the cost to develop it. See below.

Technical debt ratio on new code

new_software_quality_maintainability_debt_ratio

The ratio between the cost to develop the code changed on new code and the cost of the issues linked to it. See below.

Maintainability rating

software_quality_maintainability_rating

The rating related to the value of the technical debt ratio. See below.

Maintainability rating on new code

new_software_quality_maintainability_rating

The rating related to the value of the technical debt ratio on new code. See below.

Code smells

code_smells

The total number of code smells.

Code smells on new code

new_code_smells

The total number of code smells raised for the first time on new code.

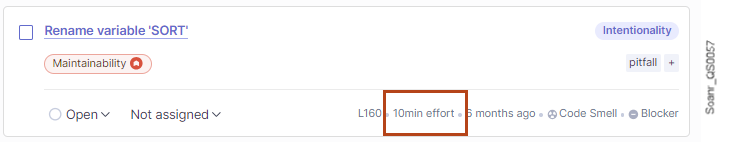

Technical debt

sqale_index

A measure of effort to fix all code smells.

Technical debt on new code

new_technical_debt

A measure of effort to fix the code smells raised for the first time on new code.

Technical debt ratio

sqale_debt_ratio

The ratio between the cost to fix the software and the cost to develop it.

Technical debt ratio on new code

new_sqale_debt_ratio

The ratio between the cost to develop the code changed on new code and the cost of the issues linked to it.

Maintainability rating

sqale_rating

The rating related to the value of the technical debt ratio.

Maintainability rating on new code

new_maintainability_rating

The rating related to the value of the technical debt ratio on new code.

Technical debt

The technical debt is the sum of the maintainability issue remediation costs. An issue remediation cost is the effort (in minutes) evaluated to fix the issue. It is taken over from the effort assigned to the rule that raised the issue.

An 8-hour day is assumed when the technical debt is shown in days.
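The two statements above can be sketched in a few lines of Python. The function names are illustrative, and the input is assumed to be a plain list of per-issue remediation costs in minutes.

```python
MINUTES_PER_DAY = 8 * 60  # an 8-hour day, as stated above


def technical_debt_minutes(remediation_costs):
    """Sum the remediation costs (in minutes) of all maintainability issues."""
    return sum(remediation_costs)


def minutes_to_days(minutes):
    """Express an effort in 8-hour days."""
    return minutes / MINUTES_PER_DAY
```

Three issues costing 30, 120, and 330 minutes add up to 480 minutes of technical debt, which is shown as one day.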

Technical debt ratio

The technical debt ratio is the ratio between the cost to fix the software (known as Technical debt) and the cost to develop the software. It is calculated based on the following formula:

sqale_debt_ratio = technical debt /(cost to develop one line of code * number of lines of code)

Where the cost to develop one line of code is predefined in the database (30 minutes by default; this value can be changed, see Code metrics).

Example:

Technical debt: 122,563 minutes

Number of lines of code: 63,987

Cost to develop one line of code: 30 minutes

Technical debt ratio: 6.4%

See the LOC definition to understand what is considered a line of code.
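The example can be checked with a short Python sketch of the formula. The function and parameter names are illustrative; the technical debt is taken to be in minutes, like the per-line development cost.

```python
def technical_debt_ratio(debt_minutes, lines_of_code, cost_per_line_minutes=30):
    """Return the technical debt ratio as a percentage."""
    development_cost = cost_per_line_minutes * lines_of_code
    return debt_minutes / development_cost * 100


ratio = technical_debt_ratio(122_563, 63_987)
print(f"{ratio:.1f}%")  # prints 6.4%
```

The sketch does not guard against a project with zero lines of code, for which the ratio is undefined.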

Maintainability rating

The default Maintainability rating scale (sqale_rating) is:

A ≥ 0% to ≤5%

B ≥ 5% to <10%

C ≥ 10% to <20%

D ≥ 20% to <50%

E ≥ 50%

You can define another maintainability rating grid: see Changing the maintainability rating grid.
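As a sketch of how the default grid maps a technical debt ratio to a letter, consider the Python function below. The name is illustrative, and one boundary choice is an assumption: the grid lists exactly 5% under both A and B, and the sketch assigns it to A.

```python
def maintainability_rating(debt_ratio_percent):
    """Map a technical debt ratio (in percent) to a rating on the default grid."""
    if debt_ratio_percent <= 5:   # assumption: exactly 5% is rated A
        return "A"
    if debt_ratio_percent < 10:
        return "B"
    if debt_ratio_percent < 20:
        return "C"
    if debt_ratio_percent < 50:
        return "D"
    return "E"
```

A ratio of 6.4%, for instance, falls in the B band.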

Security review

A list of security review metrics used in the Sonar solution. See Managing Security Hotspots for more information.

Security hotspots

security_hotspots

The number of security hotspots.

Security hotspots on new code

new_security_hotspots

The number of security hotspots on new code.

Security hotspots reviewed

security_hotspots_reviewed

The percentage of reviewed security hotspots relative to the total number of security hotspots.

New security hotspots reviewed

new_security_hotspots_reviewed

The percentage of reviewed security hotspots on new code.

Security review rating

security_review_rating

The security review rating is a letter grade based on the percentage of reviewed security hotspots. Note that security hotspots are considered reviewed if they are marked as Acknowledged, Fixed, or Safe.

The rating grid is as follows:

A = >= 80%

B = >= 70% and < 80%

C = >= 50% and < 70%

D = >= 30% and < 50%

E = < 30%
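The grid can be sketched in Python as follows. The function name is illustrative, and the handling of a project without any security hotspots (treated here as fully reviewed) is an assumption, since the grid does not cover that case.

```python
def security_review_rating(reviewed, total):
    """Return (percentage of reviewed hotspots, rating letter)."""
    # Assumption: with no hotspots at all, everything counts as reviewed.
    percent = 100.0 if total == 0 else reviewed * 100 / total
    if percent >= 80:
        rating = "A"
    elif percent >= 70:
        rating = "B"
    elif percent >= 50:
        rating = "C"
    elif percent >= 30:
        rating = "D"
    else:
        rating = "E"
    return percent, rating
```

For example, 7 reviewed hotspots out of 10 gives 70%, which is rated B.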

Security review rating on new code

new_security_review_rating

The security review rating for new code.

Coverage

A list of coverage metrics used in the Sonar solution. See the Test coverage overview page for more information.

Coverage

coverage

A mix of line coverage and condition coverage. Its goal is to provide an even more accurate answer to the question:

How much of the source code has been covered by unit tests?

coverage = (CT + LC)/(B + EL)

where:

CT: conditions that have been evaluated to true at least once

LC: covered lines = lines_to_cover - uncovered_lines

B: total number of conditions

EL: total number of executable lines (lines_to_cover)
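The formula can be written as a small Python function using the symbols defined above. The function name and the choice to return `None` when there is nothing to cover are assumptions made for the sketch.

```python
def coverage(lines_to_cover, uncovered_lines, conditions, conditions_true):
    """coverage = (CT + LC) / (B + EL), returned as a percentage."""
    covered_lines = lines_to_cover - uncovered_lines   # LC
    denominator = conditions + lines_to_cover          # B + EL
    if denominator == 0:
        return None  # assumption: no value when there is nothing to cover
    return 100 * (conditions_true + covered_lines) / denominator
```

With 90 executable lines of which 15 are uncovered, and 10 conditions of which 5 were evaluated to true, the result is (5 + 75) / (10 + 90) = 80%.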

Coverage on new code

new_coverage

This definition is identical to coverage but is restricted to new or updated source code.

Lines to cover

lines_to_cover

Coverable lines. The number of lines of code that could be covered by unit tests. For example, blank lines and full comment lines are not considered lines to cover. Note that this metric is about what is possible, not what is left to do (that is uncovered lines).

Lines to cover on new code

new_lines_to_cover

This definition is identical to lines to cover but restricted to new or updated source code.

Uncovered lines

uncovered_lines

The number of lines of code that are not covered by unit tests.

Uncovered lines on new code

new_uncovered_lines

This definition is identical to uncovered lines but restricted to new or updated source code.

Line coverage

line_coverage

On a given line of code, line coverage simply answers the question:

Has this line of code been executed during the execution of the unit tests?

It is the density of lines covered by unit tests:

line_coverage = LC / EL

where:

LC = covered lines = lines_to_cover - uncovered_lines

EL = total number of executable lines (lines_to_cover)
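To make the relationship between the three line metrics concrete, the Python sketch below derives them from per-line hit counts. The input format (a dictionary from executable line number to number of hits) is a hypothetical one chosen for illustration, not the format SonarQube stores.

```python
def line_coverage(hits_by_line):
    """Compute line coverage (%) from a mapping of executable line -> hit count."""
    lines_to_cover = len(hits_by_line)                                        # EL
    uncovered_lines = sum(1 for hits in hits_by_line.values() if hits == 0)
    covered_lines = lines_to_cover - uncovered_lines                          # LC
    if lines_to_cover == 0:
        return None  # assumption: no value when there are no executable lines
    return 100 * covered_lines / lines_to_cover
```

For `{1: 3, 2: 0, 4: 1, 5: 0}`, four lines are coverable and two were never executed, so line coverage is 50%.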

Line coverage on new code

new_line_coverage

This definition is identical to line coverage but restricted to new or updated source code.

Line coverage hits

coverage_line_hits_data

A list of covered lines.

Condition coverage

branch_coverage

The condition coverage answers the following question on each line of code containing boolean expressions:

Has each boolean expression been evaluated both to true and to false?

This is the density of possible conditions in flow control structures that have been followed during unit tests execution.

branch_coverage = (CT + CF) / (2*B)

where:

CT = conditions that have been evaluated to true at least once

CF = conditions that have been evaluated to false at least once

B = total number of conditions
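A short Python sketch of this formula follows. Representing each condition as a pair of booleans (seen true, seen false) is an assumption made for the example.

```python
def branch_coverage(conditions):
    """conditions: list of (seen_true, seen_false) pairs, one per condition."""
    total = len(conditions)                                      # B
    if total == 0:
        return None  # assumption: no value when there are no conditions
    seen_true = sum(1 for was_true, _ in conditions if was_true)      # CT
    seen_false = sum(1 for _, was_false in conditions if was_false)   # CF
    return 100 * (seen_true + seen_false) / (2 * total)
```

Two conditions, one evaluated both ways and one only ever true, give (2 + 1) / (2 * 2) = 75%.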

Condition coverage on new code

new_branch_coverage

This definition is identical to condition coverage but is restricted to new or updated source code.

Condition coverage hits

branch_coverage_hits_data

A list of covered conditions.

Conditions by line

conditions_by_line

The number of conditions by line.

Covered conditions by line

covered_conditions_by_line

Number of covered conditions by line.

Uncovered conditions

uncovered_conditions

The number of conditions that are not covered by unit tests.

Uncovered conditions on new code

new_uncovered_conditions

This definition is identical to Uncovered conditions but restricted to new or updated source code.

Unit tests

tests

The number of unit tests.

Unit test errors

test_errors

The number of unit tests that have failed.

Unit test failures

test_failures

The number of unit tests that have failed with an unexpected exception.

Skipped unit tests

skipped_tests

The number of skipped unit tests.

Unit tests duration

test_execution_time

The time required to execute all the unit tests.

Unit test success density (%)

test_success_density

test_success_density = (tests - (test_errors + test_failures)) / (tests) * 100
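As a quick illustration, the formula translates directly to Python. The function name and the `None` result for a project without tests are assumptions of this sketch.

```python
def success_density(tests, test_errors, test_failures):
    """Percentage of unit tests that neither errored nor failed."""
    if tests == 0:
        return None  # assumption: undefined when there are no tests
    return 100 * (tests - (test_errors + test_failures)) / tests
```

With 200 tests, 3 errors, and 7 failures, the success density is 95%.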

Duplications

A list of duplication metrics used in the Sonar solution.

Duplication detection is not supported for Terraform and similar IaC languages, Dart, and CSS.

Duplicated lines density (%)

duplicated_lines_density

Duplicated lines density is calculated by using the following formula:

duplicated_lines_density = duplicated_lines / lines * 100

Duplicated lines density (%) on new code

new_duplicated_lines_density

The same as duplicated lines density but on new code.

Duplicated lines

duplicated_lines

The number of lines involved in duplications.

Duplicated lines on new code

new_duplicated_lines

The number of lines involved in duplications on new code.

Duplicated blocks

duplicated_blocks

The number of duplicated blocks of lines.

For a block of code to be considered as duplicated:

For non-Java projects:

There should be at least 100 successive and duplicated tokens.

Those tokens should be spread over at least:

30 lines of code for COBOL

20 lines of code for ABAP

10 lines of code for other languages

For Java projects:

There should be at least 10 successive and duplicated statements whatever the number of tokens and lines.

Differences in indentation and in string literals are ignored while detecting duplications.
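The size thresholds above can be summarized in a small Python sketch. It only checks whether an already-detected repeated fragment is large enough to count; finding the repetition itself is not shown. The function name and the lowercase language identifiers are illustrative.

```python
MIN_TOKENS = 100
MIN_LINES = {"cobol": 30, "abap": 20}
DEFAULT_MIN_LINES = 10          # every other non-Java language
MIN_JAVA_STATEMENTS = 10


def is_duplicated_block(language, tokens=0, lines=0, statements=0):
    """Check whether a repeated fragment is large enough to be a duplicated block."""
    language = language.lower()
    if language == "java":
        # Java: only the statement count matters, whatever the tokens and lines.
        return statements >= MIN_JAVA_STATEMENTS
    min_lines = MIN_LINES.get(language, DEFAULT_MIN_LINES)
    return tokens >= MIN_TOKENS and lines >= min_lines
```

A 120-token fragment spread over 25 lines would qualify in most languages but not in COBOL, where 30 lines are required.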

Duplicated blocks on new code

new_duplicated_blocks

The number of duplicated blocks of lines on new code.

Duplicated files

duplicated_files

The number of files involved in duplications.

Size

A list of size metrics used in the Sonar solution.

New lines

new_lines

The number of physical lines on new code (number of carriage returns).

Lines of code

ncloc

The number of physical lines that contain at least one character which is neither a whitespace nor a tabulation nor part of a comment.

Lines

lines

The number of physical lines (number of carriage returns).

Statements

statements

The number of statements.

Functions

functions

The number of functions. Depending on the language, a function is defined as either a function, a method, or a paragraph. Language-specific details:

COBOL: It’s the number of paragraphs.

Dart: Any function expression is included, whether it’s the body of a function declaration, of a method, constructor, getter, top-level or nested function, top-level or nested lambda.

Java: Methods in anonymous classes are ignored.

VB.NET: Accessors are not considered to be methods.

Classes

classes

The number of classes (including nested classes, interfaces, enums, annotations, mixins, extensions, and extension types).

Files

files

The number of files.

Comment lines

comment_lines

The number of lines containing either comment or commented-out code. See below for calculation details.

Comments (%)

comment_lines_density

The comment lines density. It is calculated based on the following formula: comment_lines_density = comment_lines / (ncloc + comment_lines) * 100

Examples:

50% means that the number of lines of code equals the number of comment lines.

100% means that the file only contains comment lines.
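The two examples can be reproduced with a short Python sketch. It takes the line count in the denominator to be lines of code (`ncloc`), which is what both examples imply; the function name and the `None` result for an empty file are assumptions.

```python
def comment_lines_density(comment_lines, lines_of_code):
    """Comment lines as a percentage of comment lines plus lines of code."""
    total = lines_of_code + comment_lines
    if total == 0:
        return None  # assumption: undefined for an empty file
    return 100 * comment_lines / total
```

Here `comment_lines_density(50, 50)` gives 50.0 and `comment_lines_density(10, 0)` gives 100.0, matching the examples above.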

Lines of code per language

ncloc_language_distribution

The non-commented lines of code distributed by language.

Projects

projects

The number of projects in a portfolio.

Complexity

Complexity metrics used in the Sonar solution.

Cyclomatic complexity

complexity

A quantitative metric used to calculate the number of paths through the code.

Cognitive complexity

cognitive_complexity

A qualification of how hard it is to understand the code’s control flow. See Cyclomatic complexity: developer's guide for more information, or sign up to download the Cognitive Complexity white paper for a complete description of the mathematical model applied to compute this measure.

Cyclomatic complexity

Cyclomatic complexity is a quantitative metric used to calculate the number of paths through the code. The analyzer calculates the score of this metric for a given function (depending on the language, it may be a function, a method, a subroutine, etc.) by incrementing the function’s cyclomatic complexity counter by one each time the control flow of the function splits resulting in a new conditional branch. Each function has a minimum complexity of 1. The calculation formula is as follows:

Cyclomatic complexity = 1 + number of conditional branches

The calculation of the overall code’s cyclomatic complexity is basically the sum of all complexity scores calculated at the function level. In some languages, the complexity of external functions is additionally taken into account.

Note that function-level complexity scores cannot be viewed directly in SonarQube; they are only used to calculate the overall code's cyclomatic complexity.
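The aggregation described above can be sketched in Python as follows. The input (a mapping from function name to its number of conditional branches) is hypothetical, and the sketch ignores the language-specific extras such as the complexity of external functions.

```python
def function_complexity(conditional_branches):
    """Cyclomatic complexity of one function: 1 + number of conditional branches."""
    return 1 + conditional_branches


def overall_complexity(branches_per_function):
    """Sum the function-level scores to get the overall code's complexity."""
    return sum(function_complexity(b) for b in branches_per_function.values())
```

For `{"parse": 3, "main": 0, "validate": 5}`, the function scores are 4, 1, and 6, so the overall complexity is 11.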

Split detection by language.

ABAP

The ABAP analyzer calculates the cyclomatic complexity at the function level. It increments the cyclomatic complexity by one each time it detects one of the following keywords:

AND, CATCH, DO, ELSEIF, IF, LOOP, LOOPAT, OR, PROVIDE, SELECT…ENDSELECT, TRY, WHEN, WHILE

C/C++/Objective-C

The C/C++/Objective-C analyzer calculates the cyclomatic complexity at function and coroutine levels. It increments the cyclomatic complexity by one each time it detects:

A control statement such as: if, while, do while, for

A switch statement keyword such as: case, default

The && and || operators

The ? ternary operator

A lambda expression definition

Each time the analyzer scans a header file as part of a compilation unit, it computes the measures for this header: statements, functions, classes, cyclomatic complexity, and cognitive complexity. That means that each measure may be computed more than once for a given header. In that case, it stores the largest value for each measure.

C#

The C# analyzer calculates the cyclomatic complexity at method and property levels. It increments the cyclomatic complexity by one each time it detects:

One of these function declarations: method, constructor, destructor, property, accessor, operator, or local function declaration

A conditional expression

A conditional access

A switch case or switch expression arm

An and/or pattern

One of these statements: do, for, foreach, if, while

One of these expressions: ??, ??=, ||, or &&

COBOL

The COBOL analyzer calculates the cyclomatic complexity at paragraph, section, and program levels. It increments the cyclomatic complexity by one each time it detects one of these commands (except when they are used in a copybook):

ALSO, ALTER, AND, DEPENDING, END_OF_PAGE, ENTRY, EOP, EXCEPTION, EXEC CICS HANDLE, EXEC CICS LINK, EXEC CICS XCTL, EXEC CICS RETURN, EXIT, GOBACK, IF, INVALID, OR, OVERFLOW, SIZE, STOP, TIMES, UNTIL, USE, VARYING, WHEN

Dart

The Dart analyzer calculates the cyclomatic complexity for:

top-level functions

top-level function expressions (lambdas)

methods

accessors (getters and setters)

constructors

It increments the complexity by one for each of the structures listed above. It doesn’t increment the complexity for nested function declarations or expressions.

In addition, the count is incremented by one for each:

short-circuit binary expression or logical patterns (&&, ||, ??)

if-null assignments (??=)

conditional expressions (?:)

null-aware operators (?[, ?., ?.., ...?)

propagating cascades (a?..b..c)

if statement or collection

loop (for, while, do, and for collection)

case or pattern in a switch statement or expression

Java

The Java analyzer calculates the cyclomatic complexity at the method level. It increments the Cyclomatic complexity by one each time it detects one of these keywords:

if, for, while, case, &&, ||, ?, ->
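To see how this counting rule plays out on a small method, here is a deliberately naive Python sketch that counts the listed tokens in source text and adds the method's base value of 1. It is only an illustration of the rule: it is not how the Java analyzer works, and a text-based count like this is fooled by tokens inside strings or comments and by generic wildcards such as `List<?>`.

```python
import re

# Tokens that add one to the count, taken from the list above.
BRANCH_TOKEN = re.compile(r"\b(?:if|for|while|case)\b|&&|\|\||\?|->")


def naive_java_complexity(method_source):
    """Rough estimate: 1 + number of branching tokens found in the text."""
    return 1 + len(BRANCH_TOKEN.findall(method_source))


METHOD = """
int grade(int score, boolean bonus) {
    if (score > 90 && bonus) { return 1; }
    for (int i = 0; i < 3; i++) { score++; }
    return score > 50 ? 2 : 3;
}
"""

print(naive_java_complexity(METHOD))  # prints 5
```

The sample method scores 5: one for the method itself, plus one each for `if`, `&&`, `for`, and `?`.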

JS/TS, PHP

The JS/TS analyzer calculates the cyclomatic complexity at the function level. The PHP analyzer calculates the cyclomatic complexity at the function and class levels. Both analyzers increment the cyclomatic complexity by one each time they detect:

A function (i.e. non-abstract and non-anonymous constructors, functions, procedures or methods)

An if or (for PHP) elsif keyword

A short-circuit (AKA lazy) logical conjunction (&&)

A short-circuit (AKA lazy) logical disjunction (||)

A ternary conditional expression

A loop

A case clause of a switch statement

A throw or a catch statement

A goto statement (only for PHP)

PL/I

The PL/I analyzer increments the cyclomatic complexity by one each time it detects one of the following keywords:

PROC, PROCEDURE, GOTO, GO TO, DO, IF, WHEN, |, !, |=, !=, &, &=

A DO statement with conditions (Type 1 DO statements are ignored)

For procedures having more than one return statement: each additional return statement except for the last one, will increment the complexity metric.

PL/SQL

The PL/SQL analyzer calculates the cyclomatic complexity at the function and procedure level. It increments the cyclomatic complexity by one each time it detects:

The main PL/SQL anonymous block (not inner ones)

One of the following statements:

CREATE PROCEDURE

CREATE TRIGGER

basic LOOP

WHEN clause (the WHEN of simple CASE statement and searched CASE statement)

cursor FOR LOOP

CONTINUE / EXIT WHEN clause (the WHEN part of the CONTINUE and EXIT statements)

exception handler (every individual WHEN)

EXIT

FOR LOOP

FORALL

IF

ELSIF

RAISE

WHILE LOOP

One of the following expressions:

AND expression (AND reserved word used within PL/SQL expressions)

OR expression (OR reserved word used within PL/SQL expressions)

WHEN clause expression (the WHEN of simple CASE expression and searched CASE expression)

VB.NET

The VB.NET analyzer calculates the cyclomatic complexity at function, procedure, and property levels. It increments the cyclomatic complexity by one each time it detects:

A method or constructor declaration (Sub, Function)

One of the following keywords: AndAlso, Case, Do, End, Error, Exit, For, ForEach, GoTo, If, Loop, On Error, OrElse, Resume, Stop, Throw, Try, While

Issues

A list of issues metrics used in the Sonar solution. See the Issues Introduction page for more information.

Issues

violations

The number of issues in all states.

Issues on new code

new_violations

The number of issues raised for the first time on new code.

Open issues

open_issues

The number of issues in the Open status.

Accepted issues

accepted_issues

The number of issues marked as Accepted.

Accepted issues on new code

new_accepted_issues

The number of Accepted issues on new code.

False positive issues

false_positive_issues

The number of issues marked as False positive.

Blocker severity issues

software_quality_blocker_issues

Issues with a Blocker severity level.

High severity issues

software_quality_high_issues

Issues with a High severity level.

Medium severity issues

software_quality_medium_issues

Issues with a Medium severity level.

Low severity issues

software_quality_low_issues

Issues with a Low severity level.

Info severity issues

software_quality_info_issues

Issues with an Info severity level.

Blocker issues

blocker_violations

Issues with a Blocker severity level.

Critical issues

critical_violations

Issues with a Critical severity level.

Major issues

major_violations

Issues with a Major severity level.

Minor issues

minor_violations

Issues with a Minor severity level.

Info issues

info_violations

Issues with an Info severity level.

Severity

A list of severity levels used in the Sonar solution. See Severity at the software quality level for more information.

Blocker

An issue that has a significant probability of severe unintended consequences on the application that should be fixed immediately. This includes bugs leading to production crashes and security flaws allowing attackers to extract sensitive data or execute malicious code.

High

An issue with a high impact on the application that should be fixed as soon as possible.

Medium

An issue with a medium impact.

Low

An issue with a low impact.

Info

There is no expected impact on the application. For informational purposes only.

Blocker

An issue that has a significant probability of severe unintended consequences on the application that should be fixed immediately. This includes bugs leading to production crashes and security flaws allowing attackers to extract sensitive data or execute malicious code.

Critical

An issue with a critical impact on the application that should be fixed as soon as possible.

Major

An issue with a major impact on the application.

Minor

An issue with a minor impact on the application.

Info

There is no expected impact on the application. For informational purposes only.

Quality gates

Quality gates metrics used in the Sonar solution. See Understanding quality gates for more information.

Quality gate status

alert_status

The state of the quality gate associated with your project. Possible values are ERROR and OK.
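A script that consumes this metric, for instance in a CI job, might turn the status into a pass/fail decision. The Python sketch below assumes the status string has already been retrieved; the function name is illustrative.

```python
def quality_gate_passed(alert_status):
    """Return True for OK, False for ERROR, and reject anything else."""
    if alert_status not in ("OK", "ERROR"):
        raise ValueError(f"unexpected quality gate status: {alert_status!r}")
    return alert_status == "OK"
```

A CI step could then end with `sys.exit(0 if quality_gate_passed(status) else 1)` to fail the build when the gate is in the ERROR state.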

Quality gate details

quality_gate_details

Status (passed or failed) of each condition in the quality gate.

Advanced security (SCA)

Advanced security (SCA) metrics used in the Sonar solution. Advanced security is available as an add-on starting in Enterprise.

SCA metrics are not shown in the user interface for portfolios at this time.

SCA issue threshold

sca_count_any_issue

The total number of dependency risks.

SCA issue threshold on new code

new_sca_count_any_issue

The total number of dependency risks raised for the first time on new code.

SCA severity threshold

sca_severity_any_issue

Indicates whether there is any dependency risk at or above the specified severity.

SCA severity threshold on new code

new_sca_severity_any_issue

Indicates whether there is any dependency risk at or above the specified severity raised for the first time on new code.

SCA vulnerability threshold

sca_severity_vulnerability

Indicates whether there is any vulnerability dependency risk at or above the specified severity.

SCA vulnerability threshold on new code

new_sca_severity_vulnerability

Indicates whether there is any vulnerability dependency risk at or above the specified severity raised for the first time on new code.

SCA licensing risk threshold

sca_severity_licensing

Indicates whether there is any license dependency risk at or above the specified severity.

Note: License risks are currently always HIGH severity. If this parameter is set to BLOCKER, no license risk will fail the quality gate.
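The "at or above the specified severity" comparison, and the reason a BLOCKER threshold never triggers for license risks, can be sketched in Python. The severity order is taken from the SCA rating grid (info, low, medium, high, blocker); the function names are illustrative.

```python
# Severities ordered from least to most severe.
SEVERITIES = ["INFO", "LOW", "MEDIUM", "HIGH", "BLOCKER"]


def at_or_above(risk_severity, threshold):
    """True if the risk's severity is at or above the threshold."""
    return SEVERITIES.index(risk_severity) >= SEVERITIES.index(threshold)


def has_risk_at_or_above(risk_severities, threshold):
    """True if any risk in the list reaches the threshold."""
    return any(at_or_above(severity, threshold) for severity in risk_severities)
```

Since license risks are always HIGH, `has_risk_at_or_above(["HIGH"], "BLOCKER")` is false, while a threshold of HIGH or lower makes it true.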

SCA licensing risk threshold on new code

new_sca_severity_licensing

Indicates whether there is any license dependency risk at or above the specified severity raised for the first time on new code.

Note: License risks are currently always HIGH severity. If this parameter is set to BLOCKER, no license risk will fail the quality gate.

SCA rating threshold

sca_rating_any_issue

Rating related to dependency risks. The rating grid is as follows:

A = 0 or more info risks

B = at least one low risk

C = at least one medium risk

D = at least one high risk

E = at least one blocker risk

SCA rating threshold on new code

new_sca_rating_any_issue

Rating related to dependency risks in new code.

SCA vulnerability rating threshold

sca_rating_vulnerability

Rating related to vulnerability risks.

SCA vulnerability rating threshold on new code

new_sca_rating_vulnerability

Rating related to vulnerability risks in new code.

SCA license rating threshold

sca_rating_licensing

Rating related to dependency licenses. License risks always have a rating of D, so using a threshold of E will not fail the quality gate.

SCA license rating threshold on new code

new_sca_rating_licensing

Rating related to dependency licenses in new code.

Comment lines

Non-significant comment lines (empty comment lines, comment lines containing only special characters, etc.) do not increase the number of comment lines.

The following piece of code contains 9 comment lines:

In addition:

For COBOL: Generated lines of code and pre-processing instructions (SKIP1, SKIP2, SKIP3, COPY, EJECT, REPLACE) are not counted as lines of code.

For Java and Dart: File headers are not counted as comment lines (because they usually define the license).